More intelligent memory access

This is where things get a little more complicated, so we'll ease into it with the simpler of the memory tweaks in the Core microarchitecture. Core features better prefetchers. What's a prefetcher? It's an algorithm that works out what data the processor is likely to need next, then fetches it from main memory before it's needed. When the processor asks for it, it's already sitting in cache. This means the processor makes more of its reads from cache rather than from main memory, which is much slower. Of course, since the prefetch algorithm is basically guessing what the processor is going to want next, it's not always right. However, with a better algorithm, as we have here, it's got more chance of being on the money.
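To make the idea concrete, here's a minimal sketch of a prefetcher in Python. It's a toy model under stated assumptions, not Core's actual algorithm: the cache is an unbounded set, the `run` function and its "next address" guess are hypothetical, and all we count is how often the data was already in cache.

```python
def run(addresses, prefetch):
    """Count cache hits for a sequence of memory addresses.

    Assumptions: the cache never evicts anything, and the prefetcher
    simply guesses that the next address up will be wanted soon.
    """
    cache = set()
    hits = 0
    for addr in addresses:
        if addr in cache:
            hits += 1                # fast path: already in cache
        else:
            cache.add(addr)          # slow path: fetch from main memory
        if prefetch:
            cache.add(addr + 1)      # the guess: fetch the neighbour early
    return hits


sequential = list(range(8))                    # predictable access pattern
scattered = [0, 10, 20, 30, 40, 50, 60, 70]    # pattern the guess gets wrong

print(run(sequential, prefetch=False))  # 0 hits: every read goes to memory
print(run(sequential, prefetch=True))   # 7 hits: only the first read misses
print(run(scattered, prefetch=True))    # 0 hits: the guesses were wasted
```

The scattered case is the "not always right" scenario: the prefetcher still does its work, but none of its guesses pay off.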

The second memory access change is called memory disambiguation. This feature improves the efficiency of the processor by letting it re-order instructions more aggressively. To understand how disambiguation works, we first need to look at how instructions get re-ordered:

Let's add one more thing to our view of microarchitecture: not only does it break down x86 instructions into processor-specific ones, not only does it then fuse those together to make fewer instructions, but it also jigs them around into a different order that might be more efficient. If a set of micro-instructions arrives in the order XYZ, a microarchitecture might decide that the fastest way to work on them is actually YZX. It'll feed them into the pipeline in that order, then re-assemble the results into XYZ order at the other end, before the results go back to the program.

When a microarchitecture reorders instructions for efficiency, it sometimes has difficulty scheduling what's coming in next, because it doesn't know whether the next instruction depends on something that's still being worked on. In other words, because it's messing around with the order of instructions, it can't always tell whether the data the next instruction needs will only exist once an earlier instruction has come out of the pipeline. In our example above, assume that Z is dependent on the output from X. If that's the case, the microarchitecture can't slot Z into the pipeline straight after Y, because Z won't have all the data it needs.

However, it could be the case that Z actually has no dependency on X. In this case, it could happily be bundled in straight after Y, keeping the processor ticking over as fast as possible.
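The two cases can be sketched in a few lines of Python. This is an illustration only: the `Instr` record, the register names and the `can_issue` check are all made up for the example, and a real scheduler tracks far more than this.

```python
from collections import namedtuple

# Hypothetical instruction record: one destination register, some sources.
Instr = namedtuple("Instr", ["name", "dest", "sources"])


def can_issue(instr, in_flight):
    """True if no unfinished instruction produces a value that instr reads."""
    pending = {i.dest for i in in_flight}
    return pending.isdisjoint(instr.sources)


x = Instr("X", dest="r1", sources=("r0",))
z_dependent = Instr("Z", dest="r3", sources=("r1",))    # reads X's output
z_independent = Instr("Z", dest="r3", sources=("r4",))  # doesn't touch it

# X is still in the pipeline when the scheduler looks at Z.
print(can_issue(z_dependent, in_flight=[x]))    # False: Z must wait for X
print(can_issue(z_independent, in_flight=[x]))  # True: Z can go in early
```

The catch is that this check only works when the scheduler can actually see what each instruction reads and writes ahead of time.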

In the Pentium 4 and Pentium M microarchitectures, the instruction scheduler has no way of knowing in advance whether what it wants to work on next depends on data that isn't ready yet, so it has to play safe and wait. In Core, however, an intelligent algorithm takes a 'best guess' about whether or not it can move on and load the next set of instructions. If it's right, the pipeline is kept full and the maximum number of instructions are executed simultaneously, making for faster performance. If it turns out to be wrong, it simply waits for the previous instruction to finish and tries again.
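Here's a rough sketch of how a 'best guess' scheme like this might behave. To be clear, this is not Intel's algorithm: the `LoadPredictor` class, its counter and its threshold of 2 are assumptions invented for illustration. The predictor only gambles on going early once it has seen a couple of safe outcomes in a row, and it resets as soon as it's caught out.

```python
class LoadPredictor:
    """Toy 'best guess' predictor; the counter and threshold are assumptions."""

    def __init__(self, threshold=2):
        self.threshold = threshold
        self.safe_count = 0  # consecutive times going early would have been safe

    def guess_independent(self):
        return self.safe_count >= self.threshold

    def update(self, was_independent):
        self.safe_count = self.safe_count + 1 if was_independent else 0


def simulate(history, predictor):
    """Return (early, retried): good early starts vs. guesses that went wrong."""
    early = retried = 0
    for was_independent in history:
        if predictor.guess_independent():
            if was_independent:
                early += 1      # right guess: pipeline stays full
            else:
                retried += 1    # wrong guess: wait and try again
        predictor.update(was_independent)
    return early, retried


# True = the next operation turned out not to depend on pending work.
history = [True] * 6 + [False] + [True] * 3
print(simulate(history, LoadPredictor()))  # (5, 1)
```

In this run the predictor gets five early starts at the cost of one retry. Without any guessing, all ten operations would have waited.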

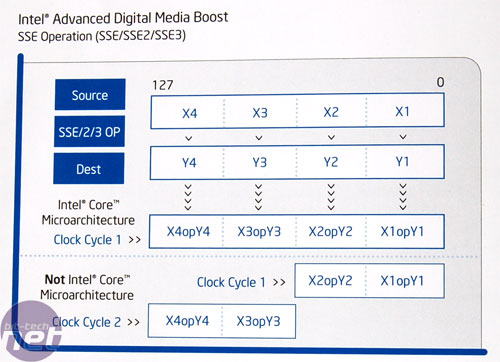

Digital media boost

We mentioned previously that SSE is an extension to the standard x86 instruction set. It is designed, primarily, to replace sequences of x86 instructions with more efficient SSE instructions that do the same thing, quicker. Typically, these instructions are in the areas of video, image acceleration, encryption, things like that.

SSE instructions are 128-bit. Previous Intel processors have only been able to handle 64 bits at once, meaning that it took two clock cycles to execute one SSE instruction. However, because Core can handle a full 128-bit SSE instruction in one go, these instructions can now be executed at a rate of one per clock cycle. This effectively makes for double the SSE performance.
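The arithmetic behind that doubling is simple enough to sketch. The `cycles_for` function below is a hypothetical back-of-the-envelope model that looks only at execution width; it ignores latency, pipelining and everything else that affects real-world throughput.

```python
import math


def cycles_for(num_instructions, instruction_bits=128, unit_bits=64):
    """Cycles needed when each instruction is split into unit-sized passes."""
    passes = math.ceil(instruction_bits / unit_bits)
    return num_instructions * passes


older = cycles_for(1000, unit_bits=64)    # 64-bit unit: two passes each
core = cycles_for(1000, unit_bits=128)    # 128-bit unit: one pass each

print(older, core, older / core)  # 2000 1000 2.0
```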

Final thoughts

Phew, that was fairly hefty. Hopefully this gives you a good idea of how Core differs from other chip microarchitectures, and how Intel is expecting to regain the performance crown this year. Getting to the bottom of technology like this is always difficult, but rewarding - we hope you've finished up a little more enlightened than when you started reading! We're certainly enormously excited about the potential that Core has to turn the enthusiast world on its head when Conroe launches later this year. Stay tuned to bit-tech for all the information we can get our hands on.
